Concurrent data structures are designed to handle simultaneous access and modification by multiple threads or processes in a concurrent computing environment. In such environments, where multiple tasks or threads are executed in parallel, traditional data structures might encounter issues like race conditions, deadlocks, and data corruption if not appropriately managed. Concurrent data structures aim to address these challenges and allow multiple threads to work with the data structure concurrently with correctness.

The main objectives of concurrent data structures include:

Thread Safety: Concurrent data structures are engineered to ensure that operations can be safely performed by multiple threads concurrently without leading to inconsistencies or errors. This involves synchronization mechanisms to manage access to the data structure’s internal state.

High Concurrency: These data structures are optimized to allow a high level of parallelism, enabling multiple threads to perform operations simultaneously whenever possible. This improves the program’s overall efficiency in a multi-core or multi-processor environment.

Minimal Blocking: Traditional synchronization mechanisms like locks can cause threads to block while waiting for access to a resource. Concurrent data structures often employ techniques like lock-free or wait-free algorithms to minimize blocking and contention, allowing threads to progress even if others are stalled.

Scalability: As the number of threads or processes increases, concurrent data structures should be able to handle the increased load without suffering from bottlenecks or significant performance degradation.

Common types of concurrent data structures include:

Concurrent Linked Lists: These are versions of linked lists adapted for concurrent access. Lock-free or non-blocking implementations ensure that insertions, deletions, and traversals can be performed concurrently without causing conflicts.

Concurrent Hash Tables: Concurrent hash tables allow multiple threads to access and modify entries concurrently. Techniques like fine-grained locking or lock-free approaches are used to manage access to individual buckets or entries.

Concurrent Queues and Stacks: These data structures provide thread-safe mechanisms for adding and removing elements concurrently. Lock-free queues, such as Michael-Scott queues, are commonly used for scenarios where multiple threads need to enqueue and dequeue items.

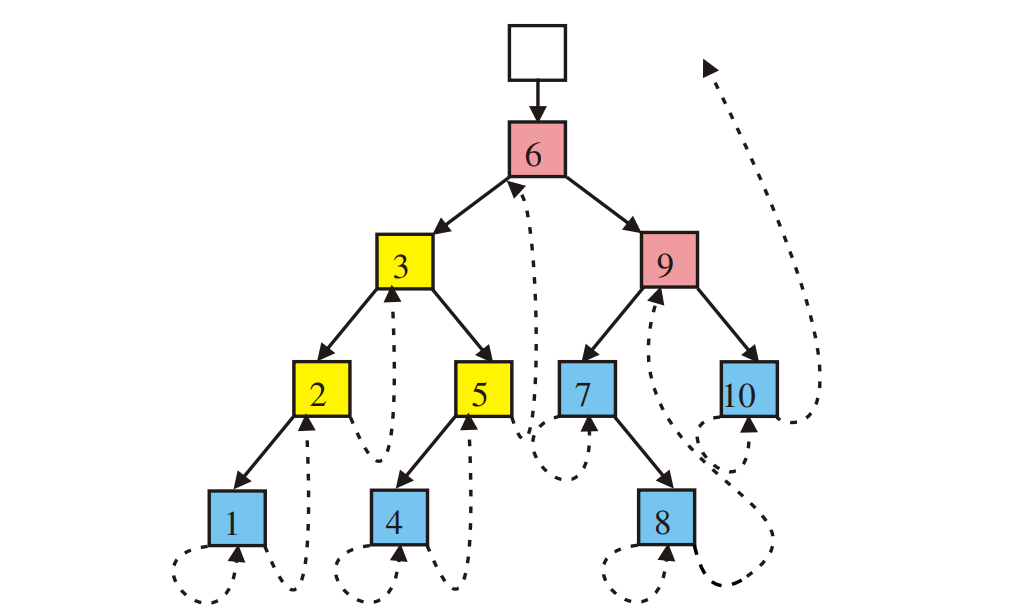

Concurrent Trees: Concurrent versions of trees, such as binary search trees and AVL trees, are designed to enable concurrent insertion, deletion, and searching operations without causing conflicts or inconsistencies.

Concurrent Sets and Maps: These data structures provide thread-safe ways to manage sets and key-value pairs. They use partitioning, sharding, or lock-free strategies to handle concurrent operations, among other techniques.

Implementing effective concurrent data structures requires a deep understanding of concurrency principles, memory ordering, and synchronization mechanisms. While they offer improved performance in multi-threaded scenarios, designing and debugging concurrent data structures can be complex due to the intricacies of managing concurrent access and ensuring correctness.